Jan van den Berg

Mar 24 ● 7 min read

This might get outdated real quick; a few words about AI.

AI is everywhere in the news, with everyone talking about how it's going to totally change our future. What really got me thinking was how this is going to affect us software engineers at SpringTree, and the industry as a whole.

So, I was listening to Dario Amodei, the CEO of Anthropic, on Lex Fridman's podcast.

He's saying programming is gonna be one of the fastest-changing fields because it's so tied to AI development. Apparently, AI models are getting crazy good at not just writing code, but also running it and understanding it. On a benchmark of real-world programming tasks, they went from handling like 3% to 50% in roughly ten months! Amodei thinks they could be doing 90% by 2026 or 2027. Plus, he believes AI-driven tools can boost productivity by automating tedious tasks and catching errors. Take a project that hasn't been touched in a while and needs to be updated to all the new standards: this is where AI can really help.

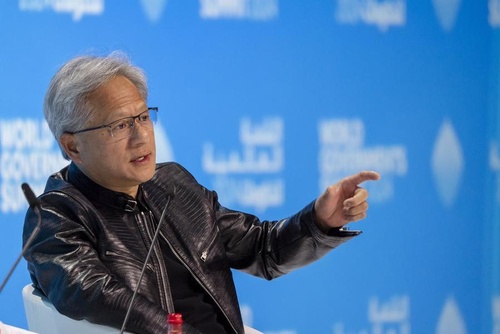

This perspective from Amodei initially gave me pause, but then there was Jensen Huang, the NVIDIA CEO, saying in Dubai in February 2024 that coding as a profession might be on its way out. He thinks AI is moving so fast that anyone wanting a tech career should learn something other than programming.

When I first heard that from Jensen Huang, I was super skeptical. I was thinking, "Yeah, you can boil an egg now, but that doesn't make you a chef." But honestly, AI's doing a lot more than just boiling eggs now. Anthropic's Claude 3.7 Sonnet especially is turning into a pretty decent coding assistant. Like, it can whip up some pretty sophisticated "snacks" now, to keep with the analogy. Maybe not perfect the first time, but with a few tweaks, the results get really useful. The big question is, will this progress keep going this fast?

Well, and I hope I don't sound too negative here, but I'm starting to see some cracks in the whole "just make neural networks bigger, feed them more data, give them more computing power, and they'll get smarter" idea. There was this article from Reuters that touched on it. It highlights that simply scaling up large language models (LLMs) doesn't always lead to proportionally better performance. Companies are finding that just throwing more data and computing power at the problem has its limits. Instead, there's a growing focus on developing new methods to enhance AI capabilities, such as improving reasoning abilities and using data more efficiently. This includes exploring techniques like "chain of thought" prompting, which lets AI break down complex problems step by step, similar to human reasoning. The search is on for more sustainable and effective ways to advance AI beyond just brute-force scaling.

So, will AI be cooking us full three-course meals anytime soon? If we're to believe guys like Amodei and Huang, and most of Silicon Valley, then yeah, probably. And probably sooner than we think.

Does that worry me? Honestly, yeah, a bit. But not for the reason you might think. I don't think there'll be less need for good software. I actually think there'll be more. Sure, some tasks will be automated, and AI will generate parts of products. But you'll always need someone who understands what the AI is doing, who can make sense of it, and fit it into the bigger picture. As a software engineer, you're doing yourself a favor by learning about AI and the tools behind it, so they can complement and enhance your work, not replace it.

AI won't magically transform someone into a software engineer, just as it can't make you a lawyer or a doctor. My deepest concern isn't about AI replacing professionals, but rather the illusion it creates. We risk a future where people believe they no longer need human expertise, assuming AI is a flawless, transparent solution. But AI, at its core, is often a 'black box': its decision-making processes are opaque. What happens when something goes wrong? When an AI-generated legal document has a critical flaw, or an AI-generated medical diagnosis is incorrect? The fallout could be severe, and the realization that we need human oversight and judgment might come too late. This isn't a distant problem; it's a potential pitfall we need to address now.

The rapid advancements in AI are undeniable, and they present both exciting opportunities and significant challenges for software engineers. The question is not whether AI will change our profession, but how we will respond to that change. Our role is not just to write code, but to design systems, solve problems, and ensure that technology serves people in meaningful ways. As AI becomes more powerful, our responsibility to understand, guide, and oversee its use becomes even greater.

Sources:

Lex Fridman's podcast with Dario Amodei: https://www.youtube.com/watch?v=ugvHCXCOmm4

Jensen Huang's comments in Dubai: https://youtu.be/iUOrH2FJKfo?si=Ufgk5rNPX5YYzTg0&t=1110

Reuters article on AI limitations: https://www.reuters.com/technology/artificial-intelligence/openai-rivals-seek-new-path-smarter-ai-current-methods-hit-limitations-2024-11-11